All About Ampere

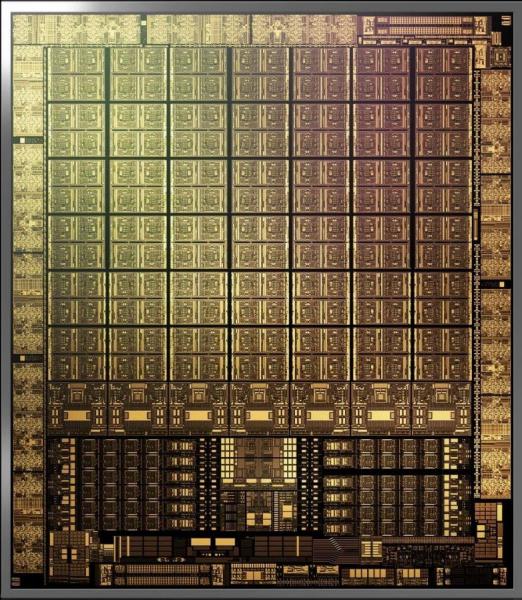

NVIDIA Ampere Architecture SM

The NVIDIA Ampere architecture is a giant leap in performance. So much has gone into it to make it the world’s fastest GPU. Fabricated on Samsung’s 8 nm NVIDIA custom process and packing 28 billion transistors, the NVIDIA Ampere architecture features an improved streaming multiprocessor (SM), second-generation Ray Tracing cores for improved ray tracing hardware acceleration, third-generation Tensor Cores for increased AI inference performance, DLSS improvements that allow for 8K gaming, and brand-new GDDR6X memory, the fastest graphics memory ever made. Let’s quickly discuss all of this.

The NVIDIA Ampere SM

The NVIDIA Ampere architecture Streaming Multiprocessor (SM) is the building block of the GPU, and it’s full of different Cores and Units and memory. One of the big changes in the NVIDIA Ampere architecture SM is with 32-bit floating-point (FP32) throughput. We doubled it. To accomplish this, we designed a new datapath for FP32 and INT32 operations, which results in all four partitions combined executing 128 FP32 operations per clock. Does this help gaming? Yes. Graphics and compute operations and algorithms rely on FP32 executions, and so do modern shader workloads. Ray tracing denoising shaders benefit from FP32 speedups, too. The heavier the ray tracing rendering workload, the bigger the performance gains relative to the previous generation.

Three Processors in One

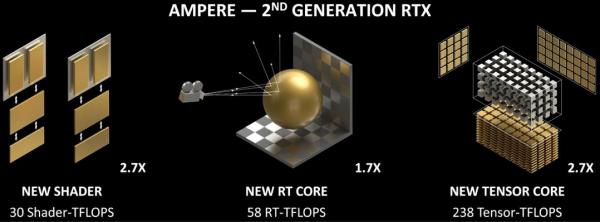

The RTX GPU has three fundamental processors—the programmable shader that we first introduced over 15 years ago, RT Core to accelerate the ray-triangle and ray-bounding-box intersections, and an AI processing pipeline called Tensor Core. Turing introduced the three processors for gamers, laying the foundation for the future of real-time ray tracing in games and the move away from rasterization, a technology invented in 1974. Ray tracing brings a level of realism far beyond what was possible using rasterization. But it’s extremely demanding to render. To support the next generation of ray tracing, all three processors required innovation, and as you can see below, the NVIDIA Ampere architecture delivers innovation for all three.

- Programmable Shader: Increased to 2 shader calculations per clock versus 1 on Turing—30 Shader-TFLOPS compared to 11 TFLOPS.

- 2nd Generation RT Core: Ray-triangle intersection throughput is now doubled so that the RT Core delivers 58 RT-TFLOPS, compared to Turing’s 34.

- 3rd Generation Tensor Core: New Tensor Core automatically identifies and removes less important DNN weights and the new hardware processes the sparse network at twice the rate of Turing—238 Tensor-TFLOPS compared to 89 TFLOPS non-sparse.
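The weight pruning mentioned in the Tensor Core item can be illustrated in software. The sketch below assumes the 2:4 structured pattern (two of every four consecutive weights are kept) that NVIDIA documents for Ampere sparsity. The function name `prune_2_of_4` and the use of magnitude as the measure of "less important" are illustrative assumptions, not NVIDIA's actual pruning procedure.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(row))
```

Half of the entries in every group end up as zeros. Hardware that knows this pattern can skip them, which is where a doubled processing rate for sparse networks would come from.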

The 3rd Generation Tensor Core

What does a Tensor Core do? You can think of it as the AI brains on GeForce RTX GPUs. Tensor Cores accelerate linear algebra used for deep neural network processing—the foundation of modern AI. New third-generation Tensor Cores accelerate AI features such as NVIDIA DLSS for AI super-resolution and the NVIDIA Broadcast app for AI-enhanced video and voice communications.

Boost Your Framerates with DLSS

Deep Learning Super Sampling (DLSS), first introduced in the Turing architecture, leverages a deep neural network to extract multidimensional features of the rendered scene, and intelligently combine details from multiple frames to construct a high-quality final image that looks comparable to native resolution while delivering higher performance. Essentially, the Tensor Cores allow DLSS to speed up your game, all while providing comparable images—sometimes even more detailed images.

DLSS is further enhanced on NVIDIA Ampere architecture GPUs by leveraging the performance of the third-generation Tensor Cores.

The side-by-side images below from Death Stranding, the latest game by Kojima-san, show the improvements: DLSS is sharper than native 4K, and it created detail from AI that native rendering didn’t show. And… the frame rate is higher.

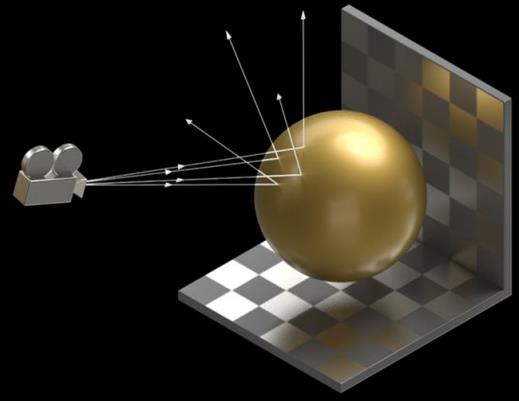

The 2nd Generation RT Core

Turing made quite the splash when it brought real-time ray tracing to the gaming world, bringing realistic lighting, shadows, and effects to games and enhancing image quality, gameplay, and immersion beyond what we could imagine.

NVIDIA Ampere architecture’s second-generation ray tracing cores have doubled down, bringing 2x the throughput of Turing’s first-generation ray tracing cores, plus concurrent ray tracing and shading for a whole new level of ray tracing performance. In other words, the more ray tracing that is done, the greater the speed-up!

The NVIDIA Ampere architecture RT Core doubles ray-intersection processing. Its ray tracing is processed concurrently with shading.
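To give a sense of the ray-intersection work an RT Core takes off the shaders, here is a minimal software sketch of a ray-triangle test using the well-known Möller-Trumbore algorithm. This is a standard textbook method chosen for illustration; the article does not say which algorithm the hardware implements, and the function name `ray_hits_triangle` is hypothetical.

```python
def ray_hits_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Return the distance t along the ray to the hit point, or None on a miss."""

    def sub(a, b):
        return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

    def dot(a, b):
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

    def cross(a, b):
        return (a[1] * b[2] - a[2] * b[1],
                a[2] * b[0] - a[0] * b[2],
                a[0] * b[1] - a[1] * b[0])

    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:
        return None  # Ray is parallel to the triangle's plane.
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det  # First barycentric coordinate.
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # Second barycentric coordinate.
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # Ignore hits behind the ray origin.


triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(ray_hits_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *triangle))
print(ray_hits_triangle((2.0, 2.0, 1.0), (0.0, 0.0, -1.0), *triangle))
```

A ray-traced frame runs tests like this many millions of times, which is why moving them to dedicated hardware and running them alongside shading pays off.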

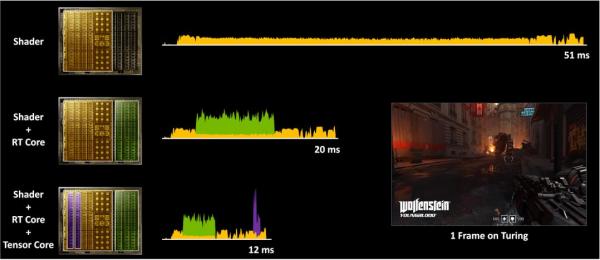

RTX Processors in Action

Ray Tracing and shader work is really demanding. It would be expensive to run everything on shaders alone. That’s why we offload work onto our specialized cores, and why we designed them to run in parallel. By utilizing our three different independent processors concurrently, we speed up processing overall. Let’s look at one frame trace of a game to see the RTX processors in action.

On the Turing architecture (RTX 2080 Super), it takes 51 ms to run this single frame of ray-traced Wolfenstein: Youngblood gameplay on the shaders alone. But when we move the ray tracing work onto the RT Cores and run it concurrently, the frame renders in a much faster 20 ms. That’s still only 50 fps, and we’re not done yet. Utilizing our Tensor Cores by enabling DLSS reduces the frame time to just 12 ms. You can see how powerful dedicated concurrent processing is.

So how have we improved this on the NVIDIA Ampere architecture? We’ve made everything faster, with our 2x FP32 and L1 cache SM advancements, and with our 2nd generation RT Cores and 3rd generation Tensor Cores. When the same single frame is run on the RTX 3080, this 2nd generation concurrency results in a frametime of only 6.7 ms. That’s a massive 150 fps. This type of efficiency is at the heart of our engineering.
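The frame times quoted in this section convert to frame rates with a single division. The short sketch below uses only the numbers given above; the helper name `fps` and the dictionary labels are illustrative.

```python
def fps(frame_time_ms):
    """Convert a frame time in milliseconds to frames per second."""
    return 1000.0 / frame_time_ms


frame_times_ms = {
    "RTX 2080 Super, shaders only": 51.0,
    "RTX 2080 Super, shaders + RT Cores": 20.0,
    "RTX 2080 Super, shaders + RT Cores + DLSS": 12.0,
    "RTX 3080, frame time quoted above": 6.7,
}

for label, ms in frame_times_ms.items():
    print(f"{label}: {ms} ms -> {fps(ms):.1f} fps")
```

Note that 6.7 ms works out to roughly 149 fps, which the text rounds to 150.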

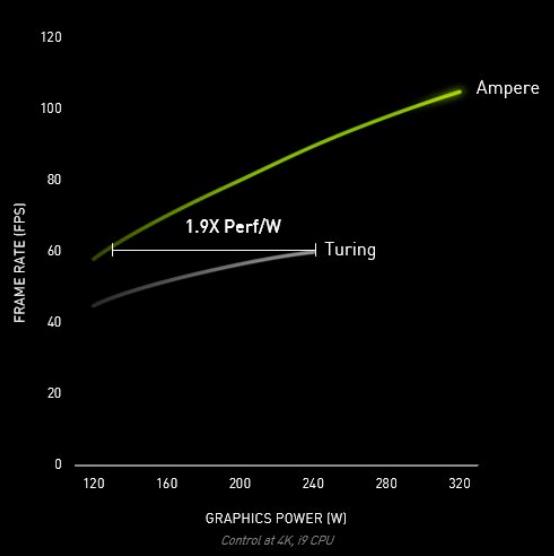

Performance Per Watt

That’s not all the efficiency you’ll find in the RTX 30 Series, either. The entire NVIDIA Ampere architecture is crafted for efficiency, from the custom process design to circuit design, logic design, packaging, memory, power, and thermal design, down to the PCB design, and the software and algorithms. We squeezed every bit of performance we could.

At the same performance level, the RTX 30 Series is 1.9x more power-efficient than Turing. See our testing addendum document to learn how to test this for yourself.

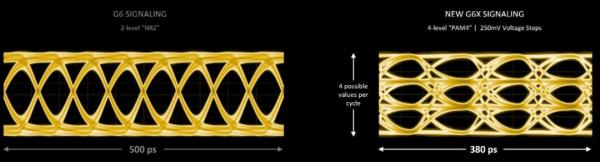

GDDR6X Memory

It’s only right that we pair the world’s fastest GPUs with the world’s fastest graphics memory. Made to deliver the best gaming performance, the RTX 3080 is equipped with 320-bit GDDR6X memory with blazing fast speeds of 19 Gbps, bringing improvements of over 40% when compared to Turing.

We worked with Micron to design GDDR6X with PAM4 signaling (Pulse Amplitude Modulation). PAM4 signaling is a big upgrade from the 2-level NRZ signaling on GDDR6 memory—instead of binary bits of data, PAM4 sends one of four different voltage levels, in 250 mV voltage steps, every clock cycle. So in the same period of time, GDDR6X can transmit twice as much data as GDDR6 memory.
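The difference between two-level and four-level signaling is easy to see in a toy encoder. The sketch below packs two bits into each PAM4 symbol and maps the symbol to a voltage in 250 mV steps. The straight binary bit-to-level mapping and the 0 mV base level are assumptions for illustration only; the article does not describe the encoding GDDR6X actually uses on the wire.

```python
STEP_MV = 250  # Voltage step between adjacent PAM4 levels, from the text above.


def to_bits(data):
    """Expand bytes into a list of bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def nrz_symbols(bits):
    """NRZ: one bit per symbol, so two possible levels."""
    return list(bits)


def pam4_symbols(bits):
    """PAM4: two bits per symbol, so four possible levels (0 to 3)."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM4 needs an even number of bits")
    return [2 * bits[i] + bits[i + 1] for i in range(0, len(bits), 2)]


def pam4_levels_mv(symbols):
    """Map each symbol to a voltage (hypothetical 0 mV base level)."""
    return [symbol * STEP_MV for symbol in symbols]


bits = to_bits(b"\x1b")  # 0x1b is 00011011 in binary.
print(len(nrz_symbols(bits)), "NRZ symbols")
print(len(pam4_symbols(bits)), "PAM4 symbols:", pam4_levels_mv(pam4_symbols(bits)))
```

The same byte needs eight NRZ symbols but only four PAM4 symbols, which is the "twice as much data in the same period of time" claim in concrete form.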

Data-hungry workloads, such as AI inference, game ray tracing, and 8K video rendering, can now be fed data at high rates, opening new opportunities for computing and end-user experiences.

So how did we do it?

Our new flagship NVIDIA Ampere architecture gaming GPU innovates on everything that was invented and introduced in Turing, providing the greatest generational leap in graphics performance. Every aspect of this 2nd generation RTX GPU architecture has been improved.

- 29.8 Shader-TFLOPS (Peak FP32) + 59.5 RT-TFLOPS (Peak FP16 Tensor) = 89.3 Total TFLOPS for Ray Tracing

- 11.2 Shader-TFLOPS (Peak FP32) + 44.6 RT-TFLOPS (Peak FP16 Tensor) = 55.8 Total TFLOPS for Ray Tracing

Let’s look at the numbers: RTX 3080 has 8704 CUDA cores, over twice the CUDA cores that the RTX 2080 Super has. It delivers 30 TFLOPS of shader performance plus dedicated RT Cores with the equivalent of 58 TFLOPS of performance, for a total of 88 effective TFLOPS of performance while ray tracing. This is 2x the effective TFLOPS of RTX 2080 Super, which was introduced a little over a year ago.
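The shader TFLOPS figure follows from the CUDA core count. As a rough sketch, peak FP32 throughput is cores times two floating-point operations per clock (one fused multiply-add) times the clock speed. The boost clocks (1.71 GHz and 1.815 GHz) and the RTX 2080 Super core count (3072) used below come from the cards' published specifications rather than from this article, so treat them as assumptions.

```python
def peak_fp32_tflops(cuda_cores, boost_clock_ghz):
    """Peak FP32 TFLOPS, counting one fused multiply-add (2 FLOPs) per core per clock."""
    return cuda_cores * 2 * boost_clock_ghz / 1000.0


# Core count for RTX 3080 is from the article; the other values are assumed specs.
rtx_3080 = peak_fp32_tflops(cuda_cores=8704, boost_clock_ghz=1.71)
rtx_2080_super = peak_fp32_tflops(cuda_cores=3072, boost_clock_ghz=1.815)

print(f"RTX 3080:       {rtx_3080:.1f} TFLOPS")
print(f"RTX 2080 Super: {rtx_2080_super:.1f} TFLOPS")
print(f"CUDA core ratio: {8704 / 3072:.2f}x")
```

Under those assumptions the results land on the roughly 30 and 11 Shader-TFLOPS quoted for the two generations.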

But it’s not just about innovating on existing technologies. It’s the first GPU to use GDDR6X, the world’s fastest graphics memory running at speeds of 19 Gbps. 10GB of this connected to a fat 320-bit memory interface delivers up to 760 GB/sec of memory bandwidth! All of this combined allows RTX 3080 to deliver the biggest generational performance gains ever.
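The 760 GB/sec figure can be checked directly from the bus width and per-pin data rate given above. The function name below is illustrative.

```python
def memory_bandwidth_gb_per_s(bus_width_bits, data_rate_gbps):
    """Total bandwidth: pins times per-pin rate in Gbps, divided by 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


print(memory_bandwidth_gb_per_s(bus_width_bits=320, data_rate_gbps=19))
```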

And more firsts:

HDMI 2.1 — GeForce RTX 30 Series GPUs are the first available to feature HDMI 2.1 support, for single cable output on 8K HDR TVs. This is critical for 8K HDR 60 FPS gaming since you need at least 71.66 Gbps before compression. This was not possible on TVs using HDMI 2.0b. Previous generation GPUs had HDMI 2.0b (18.1 Gbps) or DisplayPort 1.4a (32.4 Gbps), so an 8K TV would require 4 HDMI 2.0 cables, or a specialized DP-to-HDMI 2.1 converter, making the setup more complex and expensive. Now, one HDMI 2.1 cable simplifies connection for 8K HDR gaming.

8K HDR Capture — Available with the launch of RTX 3080, the latest update to GeForce Experience delivers the first-ever software capture solution capable of 8K HDR recording. GeForce Experience can now capture up to 8K 30 FPS and support HDR capture at all resolutions, so you no longer need to buy a separate and expensive capture card for 8K HDR. You can also simply select Manual or Instant Replay from within the GeForce Experience overlay, record 8K 30 FPS HDR content, and share directly to YouTube.

AV1 Decode — Traditionally, 8K streaming using H.264 required up to 140 Mbps of internet bandwidth for smooth real-time playback. With new AV1 decoding, bandwidth is reduced by more than 50%. However, even high-end CPUs struggle to keep up with AV1 decode. With AV1 GPU hardware acceleration on RTX 30-Series GPUs, users can consume up to 8K60 HDR content in AV1 seamlessly from YouTube.
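The 71.66 Gbps figure in the HDMI 2.1 item can be reproduced with simple arithmetic. The sketch below assumes 12 bits per color channel, three channels, and no blanking overhead. Those parameters are not stated in the article; they are simply the combination that reproduces the quoted number.

```python
import math


def uncompressed_video_gbps(width, height, fps, bits_per_channel, channels=3):
    """Raw pixel data rate in Gbps, ignoring blanking intervals and compression."""
    return width * height * fps * bits_per_channel * channels / 1e9


required_gbps = uncompressed_video_gbps(7680, 4320, 60, bits_per_channel=12)
hdmi_2_0b_gbps = 18.1  # Per-cable rate quoted in the article.

print(f"8K60 HDR needs about {required_gbps:.2f} Gbps")
print(f"HDMI 2.0b cables needed: {math.ceil(required_gbps / hdmi_2_0b_gbps)}")
```

Dividing by the HDMI 2.0b rate and rounding up gives the four cables mentioned above.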

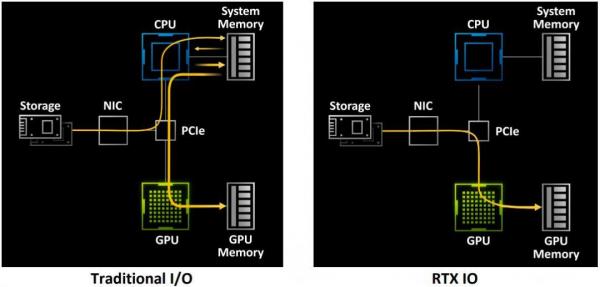

RTX IO: More Gaming, Less Waiting

PC storage solutions are entering a new era with the launch of blazing-fast Gen4 PCIe NVMe SSDs, capable of read speeds up to 20x faster than drives released just a few years ago. To make full use of these speeds, developers and game engines are working on advanced streaming and loading solutions that will allow the creation of larger worlds with less loading, less pop-in, and more detail.

Gamers face problems with traditional I/O models that use file systems and APIs designed for generic computer use, because these older I/O models are inefficient in terms of both performance and CPU utilization. Mechanical hard drives with speeds of 50 to 100 MB/sec are far too slow for gaming and streaming now, which is why enthusiasts have moved to faster Gen4 SSDs capable of up to 7 GB/sec. But even these faster SSDs are held back by the traditional I/O subsystems, and now these APIs are the bottleneck.

Developers combat I/O limitations by using lossless compression to reduce install sizes, which in turn improves I/O performance. Unfortunately, decompressing this data at Gen4 SSD data rates can consume tens of CPU cores, which is well beyond what gaming PCs can provide today. Not only does this limit I/O performance, it also impacts the next-generation streaming systems that large, open-world games need.

To support these developments, NVIDIA created RTX IO. RTX IO is a super-efficient API that requires game developer integration and delivers GPU-based lossless decompression designed specifically for game workloads. By decompressing on the GPU, we can offload the CPU and provide enough decompression horsepower to support even multiple Gen4 SSDs. This can enable I/O rates more than 100 times that of traditional hard drives, and reduce CPU utilization by 20x.
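A back-of-the-envelope calculation shows how the "more than 100 times" figure could arise from the drive speeds quoted earlier. The 2:1 compression ratio below is a hypothetical value chosen for illustration; the article does not state one, and real ratios depend on the game data.

```python
def effective_read_gb_per_s(ssd_gb_per_s, compression_ratio):
    """Uncompressed data delivered per second when the SSD streams compressed data."""
    return ssd_gb_per_s * compression_ratio


def speedup_vs_hdd(effective_gb_per_s, hdd_mb_per_s):
    """How many times faster than a hard drive, with both rates in the same units."""
    return effective_gb_per_s * 1000 / hdd_mb_per_s


raw = speedup_vs_hdd(7.0, hdd_mb_per_s=100)
compressed = speedup_vs_hdd(
    effective_read_gb_per_s(7.0, compression_ratio=2.0),  # Hypothetical 2:1 ratio.
    hdd_mb_per_s=100,
)
print(f"Gen4 SSD, uncompressed reads: {raw:.0f}x a 100 MB/sec hard drive")
print(f"Gen4 SSD, 2:1 compressed reads: {compressed:.0f}x a 100 MB/sec hard drive")
```

The raw drive alone is about 70 times faster than a 100 MB/sec hard drive. It is the decompression step, which RTX IO moves to the GPU, that pushes the effective rate past 100 times.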

Just like we did with RTX and DirectX Raytracing, we are partnering closely with Microsoft to make sure RTX IO works great with DirectStorage on Windows. By using DirectStorage, next-gen games will be able to take full advantage of their RTX IO enabled hardware to accelerate load times and deliver larger open worlds, all while reducing CPU load.

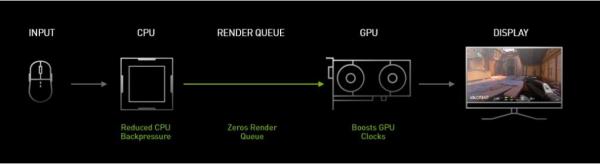

NVIDIA Reflex: Low Latency Technology

Acquire targets faster, react quicker, and increase aim precision through a revolutionary suite of new GeForce and G-SYNC technologies to optimize and measure system latency in competitive games.

NVIDIA Broadcast for AI-Powered Video and Voice

NVIDIA GeForce RTX 30 Series includes the best GPU hardware encoder—NVIDIA Encoder—enabling you to stream at max FPS and high quality. But that’s not all. You can now transform any room into a home broadcast studio—taking your livestreams and video chats to the next level with AI-powered audio noise removal, virtual background effects, and webcam auto frame. With Tensor Cores accelerating AI processing on GeForce RTX GPUs, the AI networks are able to run high-quality effects in real-time. Check out the NVIDIA Broadcast Quick Brief (coming soon) for more information!

NVIDIA Studio: RTX-Accelerated Content Creation

Backed by the NVIDIA Studio platform of dedicated drivers, SDKs, and GPU-accelerated creative apps, RTX 30 Series brings faster rendering and AI performance, more graphics memory, and exclusive NVIDIA AI tools such as Omniverse Machinima to help you finish creative projects in record time.
